My Role

In this project, I wore multiple hats, serving as both product owner and lead designer. My responsibilities were diverse and crucial to the success of the Keystone project.

As product owner, I collaborated closely with the business and development teams to refine goals and ensure the new prefab system met the customer's requirements. I was responsible for tracking backlog items and prioritizing features based on user needs and business objectives. I was not the only person in this role; it was a large effort with many substantial use cases, so it was all hands on deck.

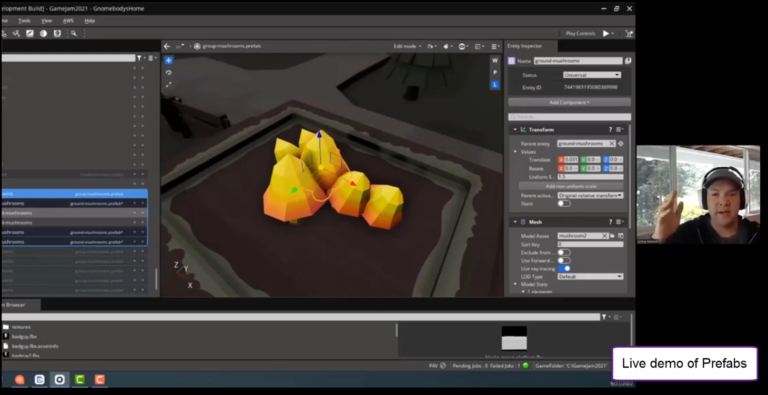

As lead designer, I created comprehensive UX specifications and workflow diagrams. These documents were essential in guiding the development team and ensuring all use cases were addressed. I was fully responsible for designing, iterating on, and usability testing all of the workflows.

A significant part of my role involved cross-team collaboration. I worked closely with developers, leads, and PMs, regularly iterating on designs based on technical constraints and opportunities. I also conducted user research, analyzed the feedback, and integrated it into our designs. This iterative process helped us continually improve the system as we learned more.

Communication was key in this project. I prepared and delivered show-and-tells to demonstrate progress and gather feedback. I also gave presentations to leadership about our direction and progress, ensuring alignment across all levels of the organization.

Our target users encompassed both existing and new users of the game engine. This included a range of developers, from individual creators to large AAA game studios. We were particularly focused on supporting several high-profile AAA games already in development.

Our business goals were threefold:

- Significantly improve the user experience for game developers working with our engine. By addressing known issues and introducing a more robust prefab system, we wanted to streamline the workflow and prevent the frustrating data loss issues they had been experiencing.

- Retain our existing user base. These developers had invested time and resources into learning and using our engine, and we needed to ensure that our improvements would enhance their experience without disrupting their ongoing projects.

- Attract new users to the engine. By offering a state-of-the-art prefab system, we hoped to position O3DE as a leading choice for game development, particularly for large-scale and open-world AAA games.

By focusing on these users and goals, we ensured that the Keystone project would deliver value not just to our immediate users, but also to the broader game development community, and would strengthen AWS's position in the game engine market.